Why have we been making changes to the GigaOm Key Criteria and Radar reports?

We are committed to a rigorous, defensible, consistent, and coherent framework for assessing and evaluating enterprise technology solutions and vendors. The scoring and framework changes we’ve made serve that commitment: they make our assessments verifiable, ground them in agreed concepts, and ensure that scoring is articulated, inspectable, and repeatable.

These adjustments make our evaluations more consistent and coherent from one report to the next, which simplifies vendor participation in our research and produces clearer reports for end-user subscribers.

What are the key changes to scoring?

The biggest change is to the feature and criteria scoring in the tables of GigaOm Radar reports. Scoring elements are still weighted as they have been in the past, but we now apply that weighting more consistently and in a standardized way across reports. The goal is to focus our assessment on the specific key features, emerging features, and business criteria that our analysts identify as decision drivers.

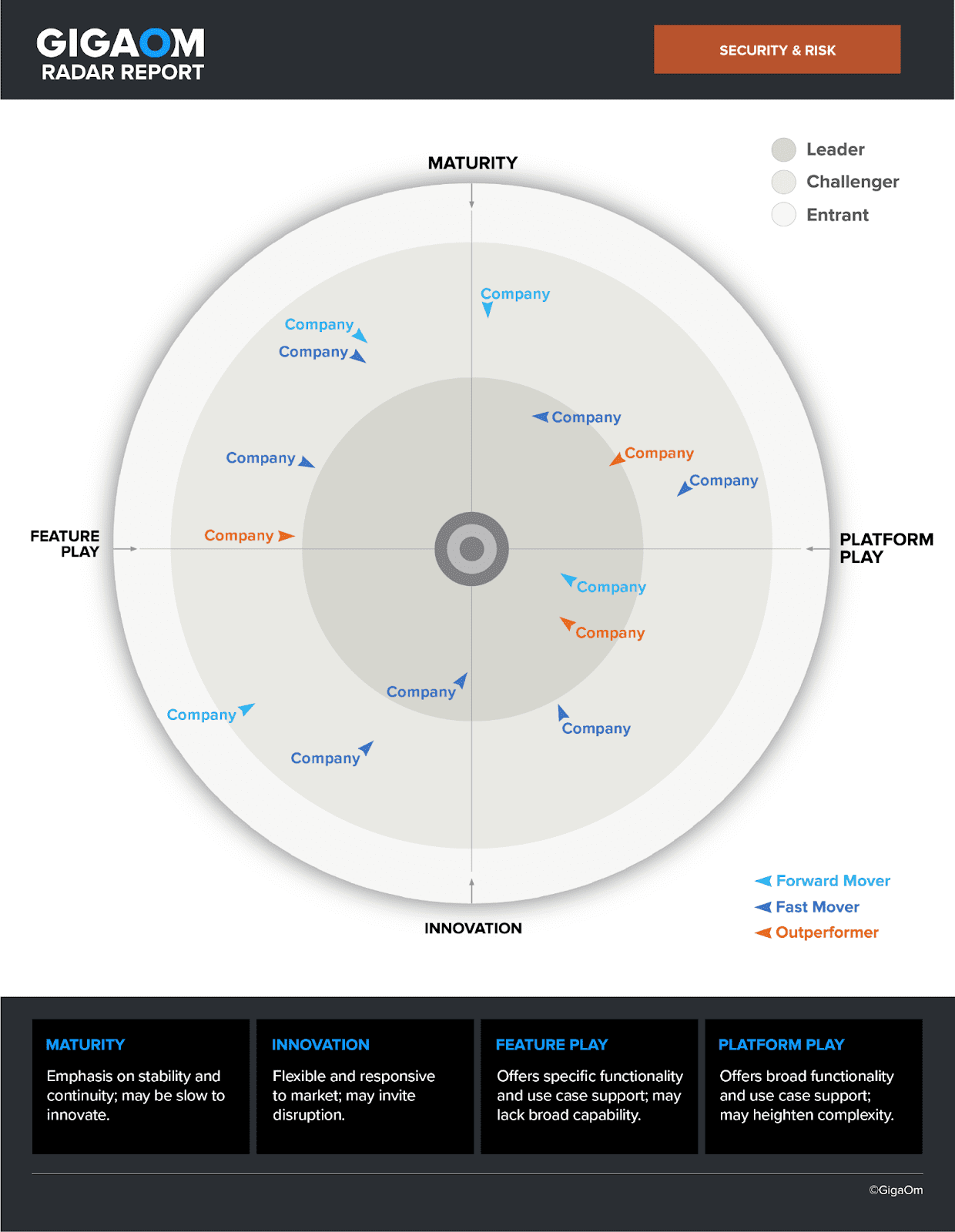

Scoring of these features and criteria determines how far from the center each vendor is plotted on the Radar chart. We are extending our scoring range from a four-point system (0, 1, 2, or 3) to a six-point system (0 through 5). This lets us distinguish truly exceptional products from those that are merely very good, gives us greater nuance in scoring, and better informs the positioning of vendors on the Radar chart.
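To make the link between scores and distance concrete, here is a minimal sketch. It is not GigaOm’s published formula: the function name `radial_distance`, the equal weighting of every score, and the linear mapping from average score to distance are all illustrative assumptions. It simply shows the stated principle that higher scores on the 0 to 5 scale place a solution closer to the bullseye.

```python
from statistics import mean

# Hypothetical illustration: feature and criteria scores use the 0-5 scale.
MIN_SCORE, MAX_SCORE = 0, 5


def radial_distance(scores: list[int]) -> float:
    """Map a set of 0-5 scores to a distance in [0, 1] from the Radar center.

    Assumption: distance is 1 minus the normalized average score, so a
    solution scoring 5 on everything sits at the bullseye (0.0) and one
    scoring 0 everywhere sits on the outer edge (1.0).
    """
    if not scores:
        raise ValueError("at least one score is required")
    for s in scores:
        if not MIN_SCORE <= s <= MAX_SCORE:
            raise ValueError(f"score {s} is outside {MIN_SCORE}-{MAX_SCORE}")
    return 1 - mean(scores) / MAX_SCORE


# An exceptional product (all 5s) versus a very good one (all 4s).
print(radial_distance([5, 5, 5]))
print(radial_distance([4, 4, 4]))
```

Under this assumed mapping, the extra point at the top of the scale is what separates an exceptional solution, plotted at the center, from a very good one plotted slightly farther out.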

Determining vendor position along the arc of the Radar chart has been refined as well. Previously, analysts were asked to decide where a solution belonged on the Radar: first, whether it should occupy the upper (Maturity) or lower (Innovation) hemisphere, and then where it should sit from left to right, from Feature Play to Platform Play. Just as we’ve extended our feature and criteria scoring, the scheme for determining quadrant position is now more granular and grounded. Analysts must consider each aspect (Innovation, Maturity, Feature Play, and Platform Play) individually and score each vendor solution’s alignment with it.

We have now adapted how we plot solutions along the arc in our Radar charts, ensuring that the data we’re processing is relevant to the purchase decision within the context of our reports. Our scoring focuses primarily on key differentiating features and business criteria (non-functional requirements), then, to a lesser extent, on emerging features that we expect to shape the sector going forward.
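The idea that key features and business criteria count most, with emerging features counting “to a lesser extent,” can be sketched as a weighted composite. This is a hypothetical illustration: the group names and the 0.4/0.4/0.2 weights are assumptions, since the actual weights are not stated here.

```python
from statistics import mean

# Hypothetical group weights. The text says only that emerging features
# count "to a lesser extent"; these numbers are illustrative assumptions.
WEIGHTS = {
    "key_features": 0.4,
    "business_criteria": 0.4,
    "emerging_features": 0.2,
}


def composite_score(scores_by_group: dict[str, list[int]]) -> float:
    """Return a weighted composite on the 0-5 scale from per-group 0-5 scores."""
    total = 0.0
    for group, weight in WEIGHTS.items():
        scores = scores_by_group[group]
        if not scores:
            raise ValueError(f"no scores for {group}")
        # Average within each group, then weight the group average.
        total += weight * mean(scores)
    return total


vendor = {
    "key_features": [5, 4, 4],
    "business_criteria": [4, 5],
    "emerging_features": [1, 2],
}
print(round(composite_score(vendor), 2))  # 3.83
```

Because the assumed weights sum to 1, the composite stays on the same 0 to 5 scale as the individual scores, and a weak emerging-features showing pulls the result down less than an equally weak showing on key features would.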

For example, consider Feature Play and Platform Play. A feature-oriented solution typically goes deeper, whether in functionality or in specific use cases or market segments. However, the same solution could also have very strong platform aspects, addressing the full scope of the challenge. Rather than forcing a choice between the two, our system now asks analysts to provide an independent score for each.

Keep in mind that these aspects exist in pairs: Maturity and Innovation form one pair, and Feature Play and Platform Play form the other. One constraint is that paired scores cannot be identical. One side must score higher than the other, and that dominant score dictates which quadrant the solution occupies. The paired scores are then blended using a weighted scheme that reflects the relative balance (say, scores of 8 and 9) or imbalance (say, scores of 7 and 2) of the feature and platform aspects. Strong, balanced scores for both feature and platform aspects will yield plots that tend toward the y-axis, signifying an ideal balance between the two.
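The pairing rules (no ties, a dominant side that sets the quadrant, and a blend that reflects balance or imbalance) can be sketched as follows. The weighted scheme itself is not published, so this sketch substitutes a simple normalized difference as a stand-in; the 0 to 10 range is inferred from the example scores, and the names `blend_pair` and `quadrant` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PairResult:
    dominant: str  # the aspect that "wins" the pair and sets the side
    offset: float  # signed position in [-1, 1]; 0 would be the dividing axis


def blend_pair(name_a: str, score_a: int, name_b: str, score_b: int) -> PairResult:
    """Blend two paired aspect scores (assumed 0-10) into a signed offset.

    Positive offsets lean toward aspect A, negative toward aspect B.
    Assumption: a normalized difference stands in for the unpublished
    weighting scheme, so balanced pairs land near the axis.
    """
    if score_a == score_b:
        raise ValueError("paired scores must differ; ties are not allowed")
    if score_a < 0 or score_b < 0:
        raise ValueError("scores must be non-negative")
    # The sum is always positive here: the scores differ and neither is negative.
    offset = (score_a - score_b) / (score_a + score_b)
    dominant = name_a if score_a > score_b else name_b
    return PairResult(dominant, offset)


def quadrant(maturity: int, innovation: int, platform: int, feature: int) -> str:
    """Combine the two pairs' dominant aspects into a quadrant label."""
    vertical = blend_pair("Maturity", maturity, "Innovation", innovation).dominant
    horizontal = blend_pair("Platform Play", platform, "Feature Play", feature).dominant
    return f"{vertical}/{horizontal}"


balanced = blend_pair("Platform Play", 9, "Feature Play", 8)
lopsided = blend_pair("Platform Play", 2, "Feature Play", 7)
print(balanced.dominant, round(balanced.offset, 3))  # Platform Play 0.059
print(lopsided.dominant, round(lopsided.offset, 3))  # Feature Play -0.556
print(quadrant(8, 6, 9, 8))  # Maturity/Platform Play
```

With this stand-in, the balanced 9/8 pair plots close to the y-axis while the 7/2 pair is pushed well toward the Feature Play side, matching the behavior described above. Close calls such as 6 and 5 also land near the midpoint.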

But you have to make a choice, right?

Yes, paired scores must be unique; the analysts must choose a winner. That can be tough, but in close cases they will give scores such as 6 and 5 or 8 and 7, which will typically place the solution near the midpoint between the two aspects. There can be no tie, and no solution sits exactly on the line.

Is Platform Play better than Feature Play?

We address this misconception a lot! “Platform” is a loaded term. Many companies market their solutions as platforms even when they lack aspects we judge necessary for a platform. We actually considered using a term other than Platform Play but ultimately found that “platform” best expresses the aspects we are discussing. So, we’re sticking with it!

One way to get clarity around the Platform Play and Feature Play concepts is to think in terms of breadth and depth. A platform-focused offering will feature breadth of functionality, use-case engagement, and customer base. A feature-focused offering, meanwhile, will provide depth in these same areas, drilling down on specific features, use cases, and customer profiles. This framing can help analysts reason through the characterization process. In our assessments, we ask, “Is a vendor deepening its offering on the feature side, or are there areas it intentionally doesn’t cover, relying instead on third-party integrations?” In short, think of breadth and depth as subtitles for Platform Play and Feature Play.

The challenge is helping vendors understand the concepts of platform and feature and how they are applied when scoring and evaluating products in GigaOm Radar reports. These concepts express character, not quality. Quality is expressed by how far each plot is from the center: the closer you are to the bullseye, the better. The rest is about character.

Vendors will want to know: How can we get the best scores?

That’s super easy—participate! When you get an invite to be in our research, respond, fill out the questionnaire, and be complete about it. Set up a briefing and, in that briefing, be there to inform the analyst and not just make a marketing spiel. Get your message in early. That will enable us to give your product the full attention and assessment it needs.

We cannot force people to the table, but companies that show up will have a leg up in this process. The analysts are informed, they become familiar with the product, and that gives you the best chance to do well in these reports. Our desk research process is robust, but it relies on the quality of your external marketing and whatever information we uncover in our research. That creates a potential risk that our analysis will miss elements of your product.

The other key aspect is the fact-check process. Respect it and stay in scope. We see companies inserting marketing language into assessments or trying to change the criteria we are scoring against. Those efforts draw focus away from your product. If issues need to be addressed, we’ll work together to resolve them. Above all, participate: the fact check is your best opportunity to raise concerns before publication.

Any final thoughts or plans?

We’re undergirding our scoring with structured decision tools and checklists, for example to help analysts determine where a solution fits on the Radar, which will further drive consistency across reports. These tools also mean that when we update a report, we can assess against the same rubric and determine what changes are needed.

Note that we aim to update Key Criteria and Radar reports based on what has changed in the market. We’re not rewriting each report from scratch every year; we’d rather put our effort into evaluating changes in the market and in vendors and their solutions. Going forward, we will seek more opportunities for efficiency so we can focus our attention on where innovation is coming from.

The post What’s the Score? appeared first on Gigaom.